We introduce Audit & Repair, the first iterative audit-and-repair loop for multi-frame consistency that operates independently of the diffusion backbone and is compatible with stories generated by both Stable Diffusion and Flux. Our method identifies visual inconsistencies across story panels, such as mismatched clothing or changes in character identity or hairstyle, and refines them using agent-guided editing. The corrected stories preserve narrative coherence and visual consistency, improving character fidelity throughout the sequence.

Abstract

Story visualization has become a popular task where visual scenes are generated to depict a narrative across multiple panels. A central challenge in this setting is maintaining visual consistency, particularly in how characters and objects persist and evolve throughout the story. Despite recent advances in diffusion models, current approaches often fail to preserve key character attributes, leading to incoherent narratives. In this work, we propose a collaborative multi-agent framework that autonomously identifies, corrects, and refines inconsistencies across multi-panel story visualizations. The agents operate in an iterative loop, enabling fine-grained, panel-level updates without re-generating entire sequences. Our framework is model-agnostic and flexibly integrates with a variety of diffusion models, including rectified flow transformers such as Flux and latent diffusion models such as Stable Diffusion. Quantitative and qualitative experiments show that our method outperforms prior approaches in terms of multi-panel consistency.

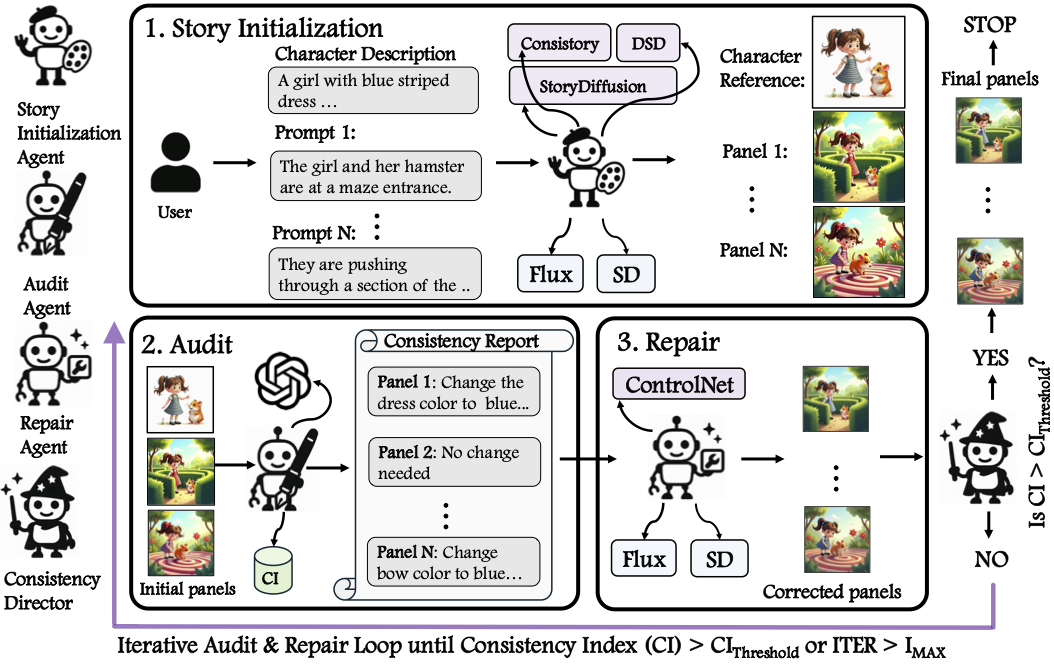

Method

Our framework operates as a collaborative multi-agent system with access to a shared memory that maintains a dynamic Consistency Index (CI), the current panel set, and the latest consistency report. First, the Story Initialization Agent takes a user-provided sequence of story prompts and character descriptions and generates initial story panels using off-the-shelf story visualization methods, including both Flux- and SD-based models. Once the initial panels are generated, the Audit Agent evaluates each panel using a vision-language model (VLM), updates the CI, and produces a detailed consistency report. In the Repair Phase that follows, the Repair Agent applies localized edits to inconsistent panels using editing tools such as Flux-ControlNet. The Consistency Director Agent oversees the entire process, iteratively triggering the Audit and Repair phases until the CI reaches a predefined threshold or the maximum number of refinement iterations is reached.
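To make the control flow concrete, here is a minimal Python sketch of the Consistency Director's loop. It is not the authors' implementation: the VLM audit and the Flux-ControlNet repair are replaced by hypothetical string-based stand-ins (`toy_audit`, `toy_repair`), panels are text descriptions rather than images, Story Initialization is reduced to a hard-coded panel list, and computing the CI as the mean of per-panel scores is an assumption, since the page does not define it. The names `SharedMemory`, `run_audit_and_repair`, `threshold`, and `max_iters` are likewise illustrative.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Tuple

# Hypothetical stand-ins: an "audit" returns per-panel scores plus a report
# mapping panel index -> requested fix; a "repair" edits a single panel.
AuditFn = Callable[[List[str]], Tuple[List[float], Dict[int, str]]]
RepairFn = Callable[[str, str], str]


@dataclass
class SharedMemory:
    panels: List[str]                                     # current panel set
    ci: float = 0.0                                       # Consistency Index
    report: Dict[int, str] = field(default_factory=dict)  # latest consistency report


def run_audit_and_repair(memory: SharedMemory, audit: AuditFn, repair: RepairFn,
                         threshold: float = 0.9, max_iters: int = 3) -> int:
    """Director loop: audit, then repair flagged panels, until CI >= threshold
    or max_iters repair rounds are done. Returns the number of repair rounds."""
    rounds = 0
    while True:
        scores, report = audit(memory.panels)
        # Assumption: CI is the mean per-panel consistency score.
        memory.ci = sum(scores) / max(len(scores), 1)
        memory.report = report
        if memory.ci >= threshold or rounds >= max_iters:
            return rounds
        # Localized edits: only panels named in the report are touched.
        for idx, fix in report.items():
            memory.panels[idx] = repair(memory.panels[idx], fix)
        rounds += 1


# --- Toy example: panels are text descriptions, not images. ---
REFERENCE = "red dress"


def toy_audit(panels: List[str]) -> Tuple[List[float], Dict[int, str]]:
    scores = [1.0 if REFERENCE in p else 0.0 for p in panels]
    report = {i: REFERENCE for i, s in enumerate(scores) if s < 1.0}
    return scores, report


def toy_repair(panel: str, fix: str) -> str:
    subject = panel.split(" in ")[0]  # keep the subject, replace the attribute
    return f"{subject} in {fix}"


memory = SharedMemory(panels=["girl in red dress", "girl in blue dress", "girl in red dress"])
rounds = run_audit_and_repair(memory, toy_audit, toy_repair)
print(rounds, memory.ci, memory.panels)
```

Only panels flagged in the report are edited, mirroring the panel-level updates that avoid regenerating the whole sequence. With these toy inputs, one repair round fixes panel 1 and the second audit reaches a CI of 1.0, so the loop stops well before `max_iters`.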

Qualitative Results

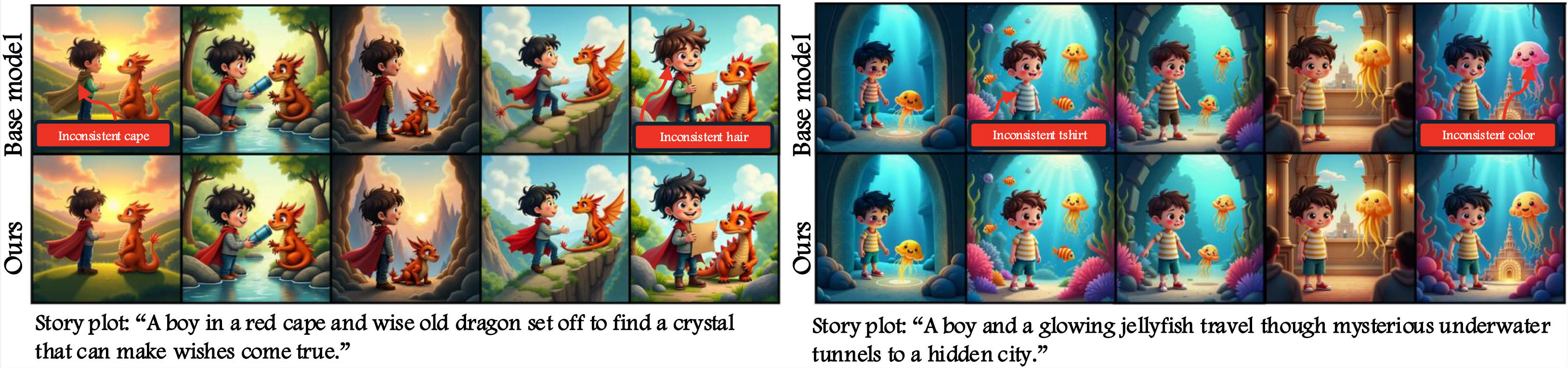

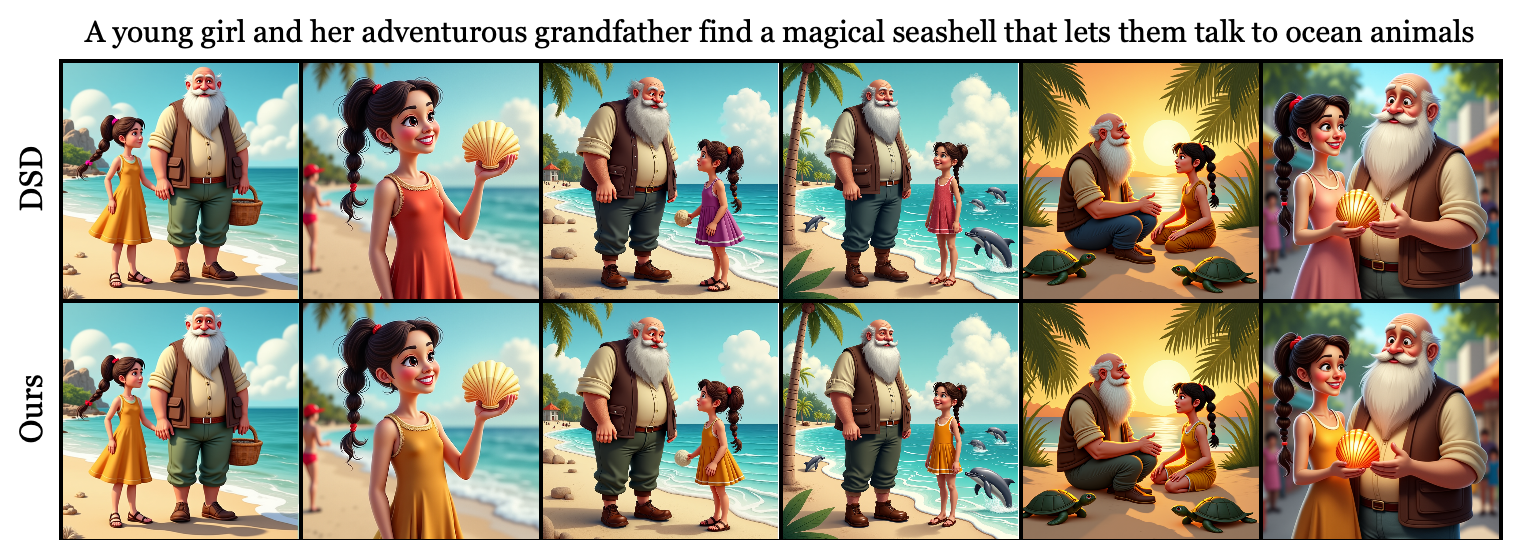

Qualitative results for Audit & Repair. Our method identifies visual inconsistencies across story panels, such as mismatched clothing or changes in character identity or hairstyle, and refines them using agent-guided editing. The corrected stories preserve narrative coherence and visual consistency, improving character fidelity throughout the sequence.

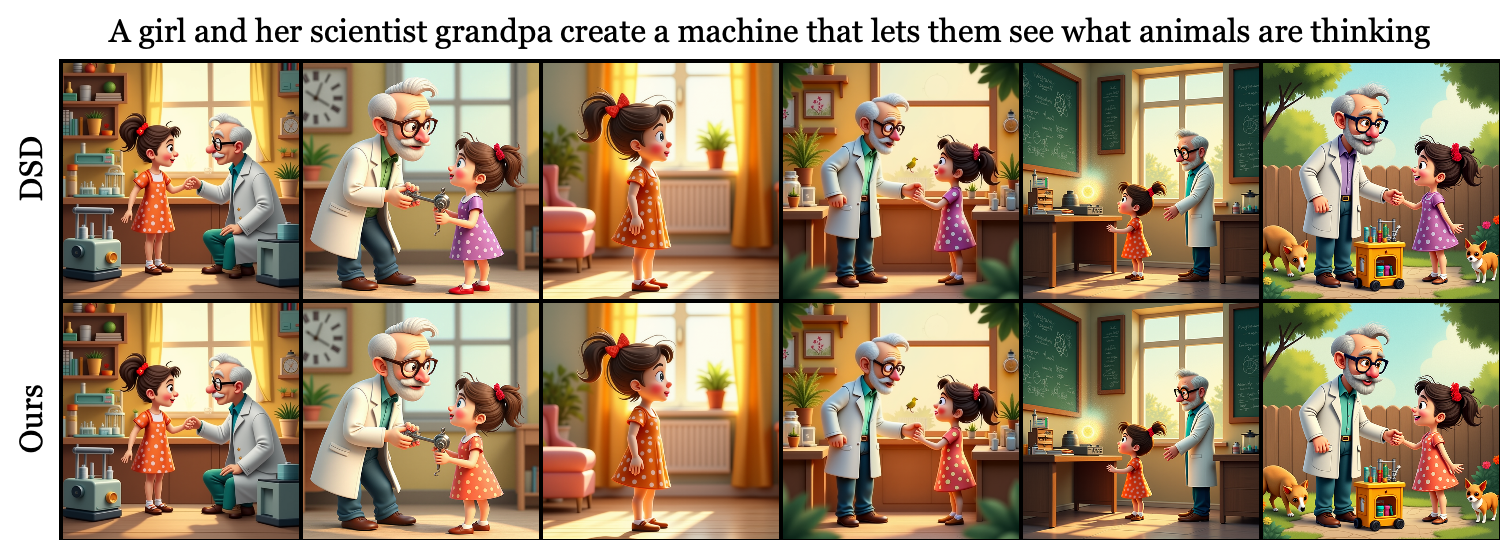

Qualitative examples of our agentic framework correcting inconsistencies in stories generated by DSD.

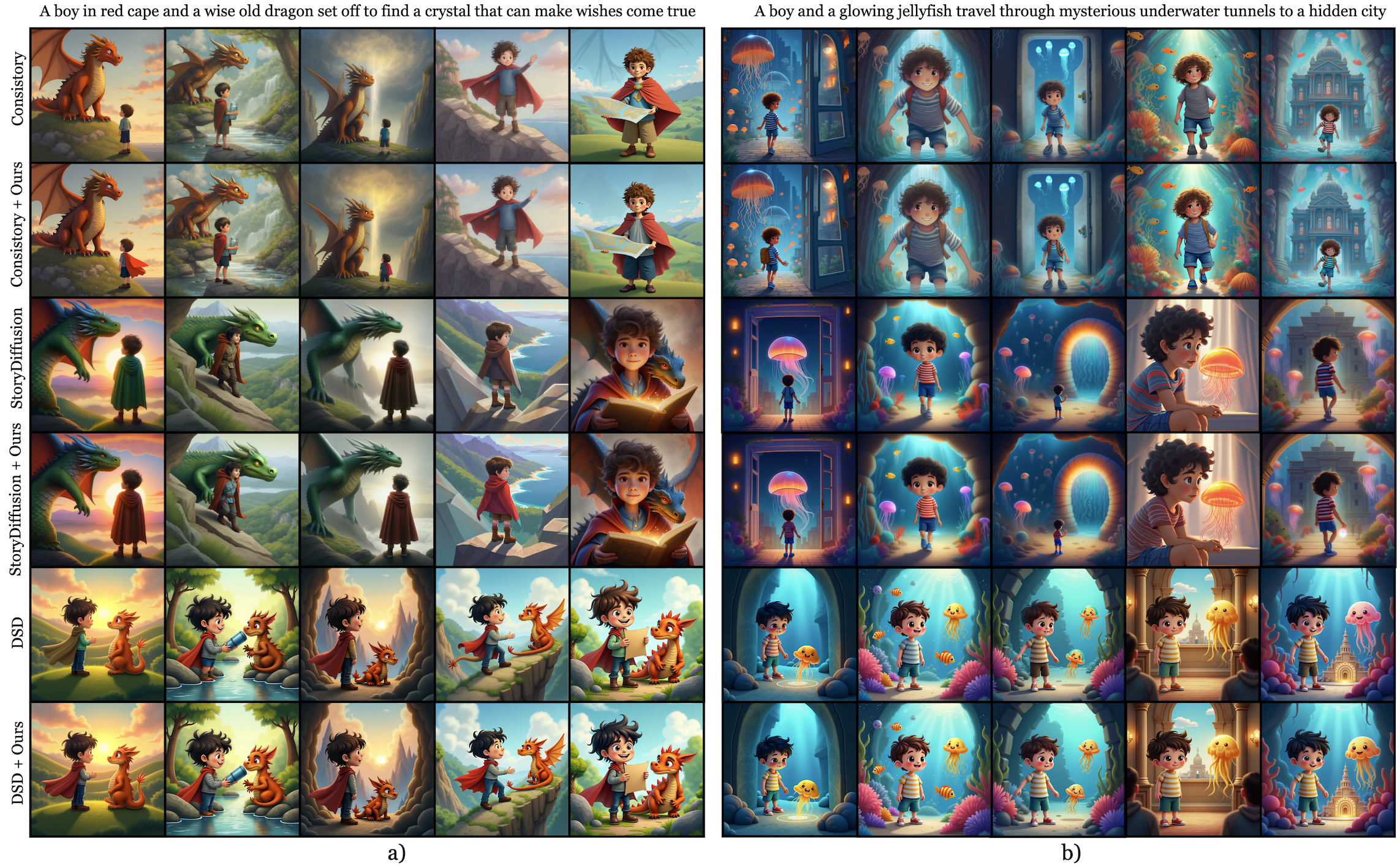

Qualitative Comparison

Qualitative comparison of our method with state-of-the-art story visualization methods: StoryDiffusion, StoryGen, ConsiStory, AutoStudio, and DSD. Our method outperforms these approaches by preserving consistent visual elements (character appearance, clothing, and identity) across all panels. In contrast, prior methods frequently exhibit inconsistencies and blending artifacts that compromise story coherence and the visual identity of characters.

Additional Results

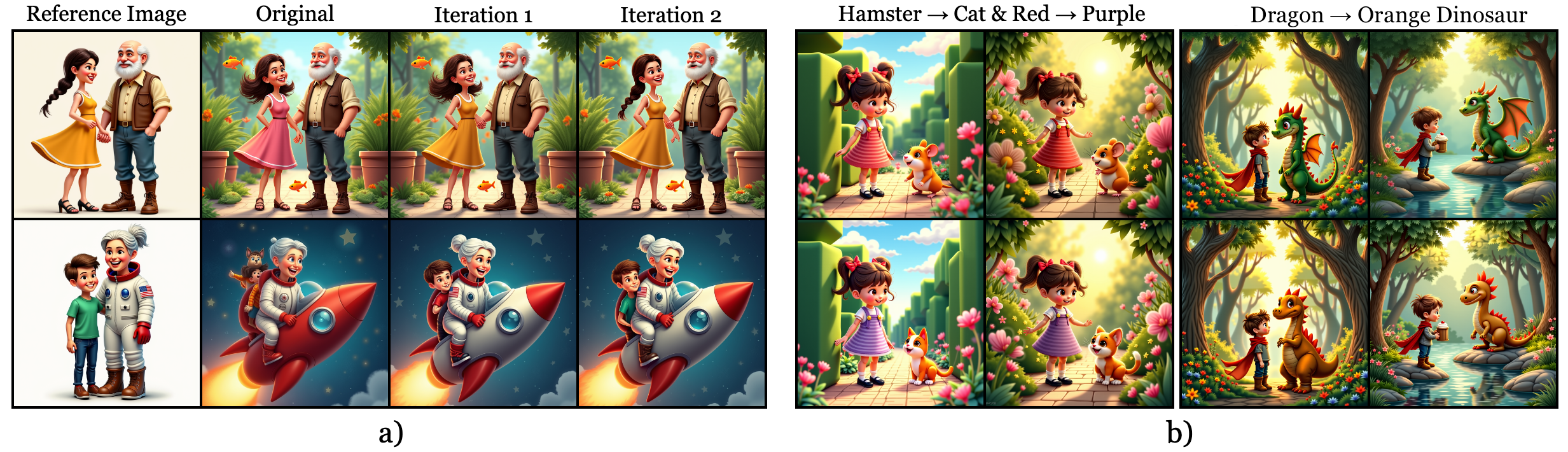

a) Iterative Refinement. Our framework progressively improves consistency across iterations: for instance, it corrects the dress color in Iteration 1 and the hairstyle in Iteration 2, or adjusts a rocket’s design to match the prompt.

b) User-in-the-loop Correction. Users can interactively guide edits, enabling both fine-grained adjustments (e.g., dress color) and broader changes (e.g., replacing a hamster with a cat), demonstrating the system’s flexibility and controllability.
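The page does not specify how user input enters the loop. One plausible wiring, sketched below in Python under that caveat, is to merge user instructions into the consistency report before the Repair Agent runs, so fine-grained requests (a dress color) and broader ones (swapping a hamster for a cat) follow the same localized-editing path. The function `merge_user_feedback` and the rule that a user request overrides the automatic finding for the same panel are hypothetical.

```python
from typing import Dict


def merge_user_feedback(auto_report: Dict[int, str], user_edits: Dict[int, str]) -> Dict[int, str]:
    """Combine the Audit Agent's findings with user requests for the next repair round.

    Assumption: a user instruction for a panel replaces the automatic finding for it.
    """
    plan = dict(auto_report)  # copy so the stored audit report is left unchanged
    plan.update(user_edits)   # user requests win on conflicts and may add new panels
    return plan


auto_report = {0: "hairstyle should match panel 2", 1: "dress should be red as in panel 0"}
user_edits = {1: "make the dress green", 3: "replace the hamster with a cat"}
plan = merge_user_feedback(auto_report, user_edits)
for idx in sorted(plan):
    print(idx, plan[idx])
```

Letting the user override is a design choice: it keeps the user in control when their intent conflicts with the auditor's notion of consistency (here, a green dress instead of the red one the audit requested), while automatic findings for other panels are still repaired.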

BibTeX

@misc{akdemir2025auditrepairagentic,
  title={Audit \& Repair: An Agentic Framework for Consistent Story Visualization in Text-to-Image Diffusion Models},
  author={Kiymet Akdemir and Tahira Kazimi and Pinar Yanardag},
  year={2025},
  eprint={2506.18900},
  archivePrefix={arXiv},
  primaryClass={cs.CV},
  url={https://arxiv.org/abs/2506.18900},
}